Most technology companies start with a single cloud provider. Over time, they adopt that provider's cloud-native functionality. This is expected and makes complete sense: moving towards cloud-native architectures brings convenience and, possibly, cost-efficiency.

But if you are not careful, you may get locked in to that cloud provider.

Many teams would like the option to switch their cloud provider or, more commonly, to run a part of their product or some of their environments on another cloud. The reasons vary. Your customer may have a preferred cloud hosting clause. You might want to expand to a region dominated by other cloud providers. Other reasons could be data-haven laws, pricing, or a particular service on another cloud that makes things much easier for you.

Some technology teams value this optionality so much that they forgo cloud-native functionality and operate just as they would in a traditional data center. This adds significantly to the tooling needs and makes the infrastructure expensive. After all, the cloud was never meant to be run like a traditional data center!

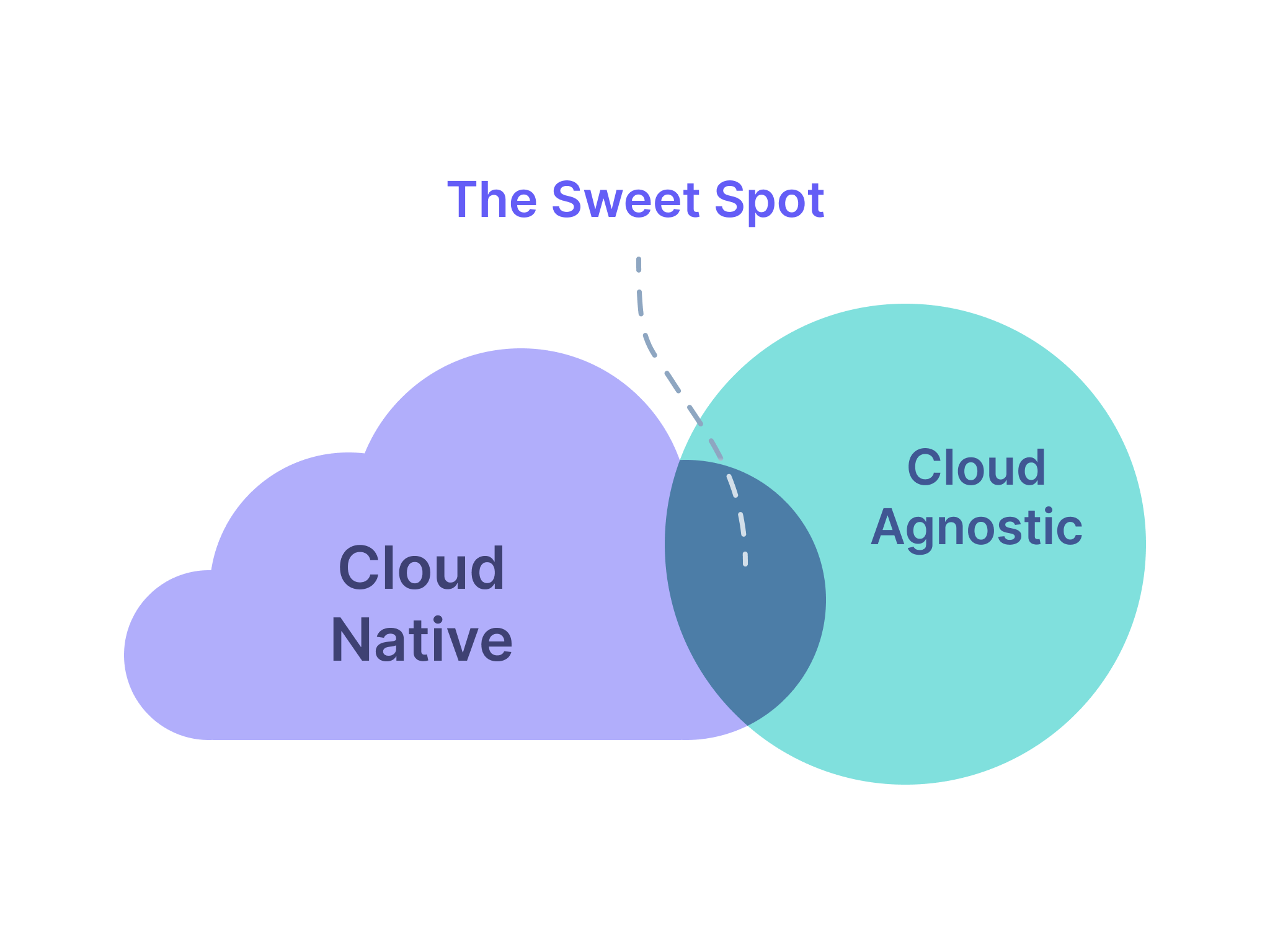

Having the best of both worlds isn't very hard. It takes a few design guidelines on both the Dev and the Ops side.

These are best practices even if you choose to stay on one cloud forever.

Dev Guidelines and Best Practices

It all boils down to following simple decision models. We like to think of them as the "blue zone" (portable) and the "grey zone" (tied to one cloud).

- Protocol-compliant cloud-native resources: As an example, AWS Aurora is a cloud-native MySQL offering from AWS, and it is absolutely okay to use. Applications don't need to know whether they are talking to an Aurora instance or to vanilla MySQL hosted on a bare EC2 machine. This is the "blue zone", but it shifts to the grey zone once you start making assumptions about specific Aurora features. For instance, you might treat an Aurora read replica as a lower-latency version of master-slave replication and design your apps around that latency. That assumption does not hold in general, and it may have no equivalent in open-source setups or on other cloud providers. Similarly, if you modify your applications to use the Aurora Data API, you move towards the grey zone!

- Reliable and popular cloud components: Some services offered by cloud providers, like S3, are distinctive and widely popular. Most other cloud providers have a look-alike for this type of service, such as Azure Blob Storage or Google Cloud Storage. They offer similar or identical features through different APIs. These services are absolutely worth using, but the dependency needs to be managed. The solution is fairly simple: do not build deep tie-ins; build utility layers for the functionality you need. For such widely popular services, cloud-agnostic wrappers and SDKs are common as well, e.g., MinIO. This shifts the service back to the "blue zone".

- Niche cloud features: Then there are capabilities unique to each cloud that can bring down your development time significantly. For example, S3 Select can add new capabilities to the objects you already store in S3. You can use such a feature by wrapping it in a microservice or a functional abstraction, so that you can at least write another cloud-specific implementation if it comes to that. This keeps the change localized instead of spreading it across your whole codebase.
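The "utility layer" idea from the bullets above can be sketched roughly as follows. This is a minimal illustration, not a recommended library design: `ObjectStore`, `InMemoryStore`, `LocalDirStore` and `save_invoice` are hypothetical names invented for this example. A real S3, Azure Blob Storage or Google Cloud Storage backend would be one more subclass wrapping that provider's SDK, and a niche feature could be wrapped the same way behind its own small interface.

```python
from abc import ABC, abstractmethod
from pathlib import Path
import tempfile


class ObjectStore(ABC):
    """Hypothetical utility layer: the only storage API application code sees."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None:
        """Store data under the given key."""

    @abstractmethod
    def get(self, key: str) -> bytes:
        """Return the data stored under the given key."""


class InMemoryStore(ObjectStore):
    """Stand-in backend, handy for tests."""

    def __init__(self) -> None:
        self._objects = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class LocalDirStore(ObjectStore):
    """Second backend; a cloud-specific one would follow the same shape."""

    def __init__(self, root: Path) -> None:
        self._root = root

    def put(self, key: str, data: bytes) -> None:
        path = self._root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self._root / key).read_bytes()


def save_invoice(store: ObjectStore, invoice_id: str, pdf: bytes) -> str:
    """Application code: depends on ObjectStore, never on a provider SDK."""
    key = f"invoices/{invoice_id}.pdf"
    store.put(key, pdf)
    return key


if __name__ == "__main__":
    # Swapping the backend does not touch save_invoice.
    with tempfile.TemporaryDirectory() as tmp:
        for store in (InMemoryStore(), LocalDirStore(Path(tmp))):
            key = save_invoice(store, "42", b"%PDF-demo")
            print(type(store).__name__, key, len(store.get(key)))
```

The design choice here is that only the backend classes would ever import a provider SDK, so moving an environment to another cloud means writing one new class rather than touching every call site.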

Ops Guidelines and Best Practices

The cases above take care of the Dev part. What about Ops?

The Ops setup generally includes backup, recovery, code delivery, observability, security and high availability (HA). Instead of building all of these in a completely cloud-agnostic way, it is prudent to use some of the cloud-native capabilities of each cloud. This reduces the burden of building everything from scratch without errors.

A few tips while you build your Ops toolchain:

- There should be a central repository of policies that is agnostic of the implementation. The implementation may choose the most reliable method available on each cloud or in each setup. For example, a disaster recovery policy should specify which backups to keep and how frequently to take them, regardless of whether the implementation is Aurora MySQL or a self-hosted MySQL on a Linux server.

- You should provide a uniform developer experience even if your environments contain a mix of self-hosted and cloud-native components. For example, metrics should be pulled from everywhere and collated into a single source of truth, so that dashboards and alerts are created in a uniform way.

- Kubernetes is the first step towards being cloud-native and cloud-agnostic at the same time. However, the code delivery workflows should not change even when the underlying Kubernetes clusters are managed by a different cloud provider on each cloud.
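As a rough sketch of the first tip, a policy can be kept as plain data and translated into per-implementation plans. Everything below is hypothetical: `BackupPolicy`, the planner functions, the target names and the dictionary keys are illustrative only and do not correspond to any real cloud API or tool configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupPolicy:
    """Implementation-agnostic policy: how often to back up, how long to keep."""
    frequency_hours: int
    retention_days: int


def managed_db_plan(policy: BackupPolicy) -> dict:
    """Illustrative plan for a cloud-managed database (keys are made up)."""
    return {
        "kind": "managed-snapshots",
        "snapshot_every_hours": policy.frequency_hours,
        "retain_days": policy.retention_days,
    }


def self_hosted_plan(policy: BackupPolicy) -> dict:
    """Illustrative plan for a self-hosted database: a cron schedule for dumps."""
    return {
        "kind": "cron-dump",
        # Standard 5-field cron syntax: at minute 0 of every Nth hour.
        "cron": f"0 */{policy.frequency_hours} * * *",
        "prune_older_than_days": policy.retention_days,
    }


# Hypothetical registry: one planner per kind of implementation.
PLANNERS = {
    "aurora-mysql": managed_db_plan,
    "self-hosted-mysql": self_hosted_plan,
}


def plan_for(target: str, policy: BackupPolicy) -> dict:
    """Translate the central policy into an implementation-specific plan."""
    return PLANNERS[target](policy)


if __name__ == "__main__":
    dr_policy = BackupPolicy(frequency_hours=6, retention_days=30)
    for target in PLANNERS:
        print(target, plan_for(target, dr_policy))
```

The policy is written once in the central repository; each cloud or self-hosted setup contributes only a small translation function.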

Conclusion

Being cloud-agnostic doesn't mean being multi-cloud. Nor does it mean migrating to another cloud at will.

It simply means that you could host a particular environment of yours on another cloud within a reasonable time, say weeks rather than years. This gives you the optionality you need for the future without investing up front in tooling for other clouds. You don't need to sacrifice cloud-native functionality either; you just need to manage the abstractions well.

We at Facets.cloud are building on the above principles to provide you with the tooling needed to achieve the best of both worlds. Do write to us to know more or to collaborate!